MIT engineers have created a robot that finds specific items buried beneath other objects. The task proved complex, but a new system dubbed FuseBot has demonstrated that it can reason about a pile of objects and sort through it to find the most likely hiding place.

The research is motivated by the proliferation of RFID technology, particularly in the retail and warehouse industries. It builds on a previous robotic arm that used visual information and radio frequency (RF) signals to search for objects carrying RFID tags. The new system does not need every item to be tagged, only some of them. It’s quick, too. Senior researcher Fadel Adib, associate professor in the Department of Electrical Engineering and Computer Science and director of the Signal Kinetics group in the Media Lab, says, “This speed could be especially useful in an e-commerce warehouse. A robot tasked with processing returns could find items in an unsorted pile more efficiently with the FuseBot system. What this shows, for the first time, is that the mere presence of an RFID-tagged item in the environment makes it much easier for you to achieve other tasks in a more efficient manner. We were able to do this because we added multimodal reasoning to the system: FuseBot can reason about both vision and RF to understand a pile of items.”

The robotic arm uses an attached video camera and RF antenna to retrieve an untagged target item from a mixed pile. The system scans the pile with its camera to create a 3D model of the environment. Simultaneously, it sends signals from its antenna to locate RFID tags. These radio waves can pass through most solid surfaces. If the target item is not tagged, FuseBot knows the item cannot be located at the exact same spot as an RFID tag.

Algorithms fuse this information to update the 3D model of the environment and highlight possible locations of the target item. The robot is preprogrammed with the target's size and shape. The system then reasons about the objects in the pile and the RFID tag locations to determine which item to remove, with the goal of finding the target item in the fewest moves.
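To make the idea concrete, here is a minimal sketch of that fusion step in Python. It is not FuseBot's actual algorithm: the grid cells, the uniform starting belief, and the function names `target_belief` and `best_item_to_remove` are all assumptions made for illustration. The sketch rules out cells known to hold tagged items, then picks the visible item whose removal would expose the most probability of finding the target.

```python
def target_belief(hidden_cells, tagged_cells):
    """Spread belief evenly over hidden cells that no RFID tag occupies.

    Assumption: the target is untagged, so it cannot sit where a tag is.
    """
    candidates = sorted(set(hidden_cells) - set(tagged_cells))
    if not candidates:
        return {}
    p = 1.0 / len(candidates)
    return {cell: p for cell in candidates}


def best_item_to_remove(belief, covers):
    """Pick the item whose removal exposes the most target probability.

    `covers` maps a hypothetical item name to the set of cells it hides.
    """
    def exposed_mass(item):
        return sum(belief.get(cell, 0.0) for cell in covers[item])

    return max(covers, key=exposed_mass)


# Hypothetical 2x2 pile: one cell is known to hold a tagged item.
hidden = [(0, 0), (0, 1), (1, 0), (1, 1)]
tagged = [(0, 0)]
belief = target_belief(hidden, tagged)
covers = {"box": {(0, 0), (0, 1)}, "shirt": {(1, 0), (1, 1)}}
print(best_item_to_remove(belief, covers))  # shirt
```

Here the shirt hides two candidate cells while the box hides only one, so removing the shirt is the more informative move.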

The robot is unsure how objects are oriented under the pile, or how a soft item might be deformed by heavier items pressing on it. It overcomes this with probabilistic reasoning, using what it knows about the size and shape of an object and its RFID tag location to model the 3D space that object is likely to occupy.
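One way to picture this kind of probabilistic reasoning is a small Monte Carlo sketch. It is a simplified 2D illustration, not the researchers' model: it assumes a rigid rectangular item with its tag at the center and an orientation that is equally likely in any direction, and the function name `occupancy_probability` is hypothetical.

```python
import math
import random


def occupancy_probability(point, tag, length, width, samples=2000, seed=0):
    """Estimate the chance that `point` lies inside a length x width
    rectangle centered on `tag` whose orientation is unknown.

    Assumptions: 2D, rigid item, tag at the item's center, orientation
    uniform over [0, pi).
    """
    rng = random.Random(seed)
    dx, dy = point[0] - tag[0], point[1] - tag[1]
    hits = 0
    for _ in range(samples):
        theta = rng.uniform(0.0, math.pi)
        # Express the offset from the tag in the rectangle's own frame.
        u = dx * math.cos(theta) + dy * math.sin(theta)
        v = -dx * math.sin(theta) + dy * math.cos(theta)
        if abs(u) <= length / 2 and abs(v) <= width / 2:
            hits += 1
    return hits / samples


tag = (0.0, 0.0)
print(occupancy_probability((0.0, 0.0), tag, length=4, width=2))  # 1.0
print(occupancy_probability((3.0, 0.0), tag, length=4, width=2))  # 0.0
print(occupancy_probability((1.5, 0.0), tag, length=4, width=2))
```

The spot at the tag is always occupied, a spot beyond the item's reach never is, and spots in between get a probability that depends on how many orientations would cover them. A deformable item would need a richer model than this rigid rectangle.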

As it removes items, it also uses reasoning to decide which item would be best to remove next. After it removes an object, the robot scans the pile again and uses new information to refine its strategy.
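The remove-then-rescan cycle can be sketched as a simple loop. This is a toy illustration under stated assumptions rather than FuseBot's planner: the pile is reduced to named locations, each hypothetical item hides a set of them, and "rescanning" is modeled as discarding the locations just exposed and renormalizing the remaining belief.

```python
def update_after_miss(belief, exposed):
    """Drop locations that were just exposed and found empty, then renormalize."""
    remaining = {loc: p for loc, p in belief.items() if loc not in exposed}
    total = sum(remaining.values())
    if total == 0:
        return {}
    return {loc: p / total for loc, p in remaining.items()}


def search(belief, covers, target_location):
    """Greedily remove items until the target is exposed.

    Returns (found, moves). `covers` maps an item name to the locations it hides.
    """
    covers = dict(covers)  # work on a copy so the caller's pile is untouched
    moves = []
    while covers:
        # Choose the item hiding the most remaining probability.
        item = max(covers, key=lambda name: sum(belief.get(loc, 0.0) for loc in covers[name]))
        exposed = covers.pop(item)
        moves.append(item)
        if target_location in exposed:
            return True, moves
        belief = update_after_miss(belief, exposed)
    return False, moves


belief = {"A": 0.5, "B": 0.3, "C": 0.2}
covers = {"towel": {"A"}, "box": {"B"}, "toy": {"C"}}
print(search(belief, covers, target_location="B"))  # (True, ['towel', 'box'])
```

After the towel is lifted and nothing is found beneath it, the belief shifts to the remaining locations, and the box becomes the best next move.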

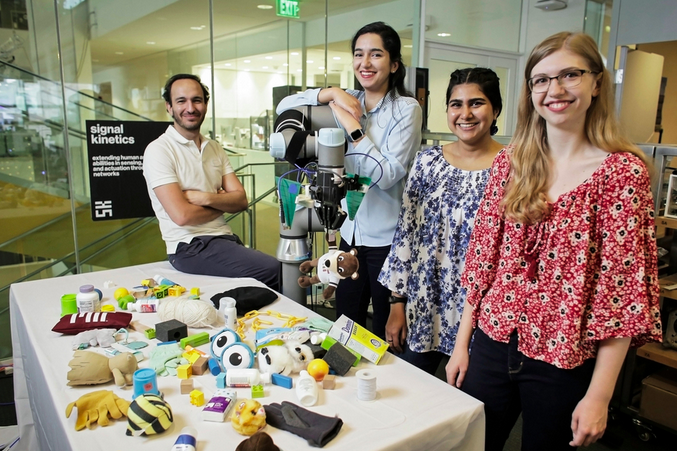

The team ran more than 180 experimental trials using real robotic arms and piles of household items, such as office supplies, stuffed animals and clothing. They varied the size of the piles and the number of RFID-tagged items in each run.

FuseBot extracted the target item successfully 95% of the time, compared to 84% for the previous robotic system. It accomplished this using 40% fewer moves, and was able to locate and retrieve targeted items more than twice as fast.

For the next step of the project, the researchers are planning to incorporate more complex models into FuseBot so it performs better on deformable objects. Then, they are keen to explore different manipulations, like pushing items out of the way. Future iterations could also be used with a mobile robot that searches multiple piles for lost objects.